Navigating the Hyperreal: AI Faces, Bias Challenges, and Tools for a Diverse Future

In a world increasingly shaped by artificial intelligence, the line between reality and simulation continues to blur. A recent study reports a fascinating, and somewhat unsettling, finding: AI-generated faces, particularly those of white individuals, are often perceived as more human than real faces. This phenomenon, termed “AI hyperrealism,” raises questions about potential consequences in areas such as identity theft and also sheds light on the biases embedded in AI algorithms.

In the study, conducted by researchers from Australia, the UK, and the Netherlands, participants were shown AI-generated images of white faces alongside real white faces. Strikingly, 66% of the AI images were rated as human, compared with 51% of the real images. The study also found evidence of the Dunning-Kruger effect: the participants who made the most errors in distinguishing AI faces from real ones were the most confident in their judgments.

Dr. Zak Witkower from the University of Amsterdam emphasizes the potential ramifications of AI hyperrealism, especially in domains such as online therapy and robotics. The study also highlights the need to address bias in AI systems, since hyperrealistic faces could inadvertently perpetuate social biases.

Beyond White Faces: The Complexities of Bias in AI

Notably, the hyperrealism effect was not observed for images of people of color. The researchers suggest this discrepancy may stem from the predominantly white dataset used to train the AI algorithm. This raises concerns about perpetuating racial biases and about the impact on critical areas, such as finding missing children, where AI-generated faces play a role.

This finding connects to broader discussions of bias. Separate research on own-race bias (ORB), a phenomenon in which unfamiliar faces from other races are remembered less effectively, found that growing up in a multiracial society does not eliminate ORB completely. The study suggests that exposure to other-race faces accounts for only a small part of ORB, a reminder that bias in face perception, whether human or machine, calls for nuanced understanding.

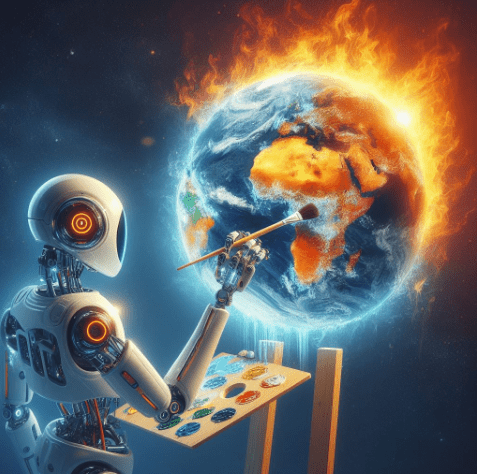

Beyond facial recognition, bias is a pervasive issue in computer vision systems, and measuring it accurately is itself a challenge. Traditional measures such as the Fitzpatrick scale are one-dimensional and often fail to capture the complexity of human skin tones. Sony’s tool extends the scale to two dimensions, measuring skin lightness as well as hue, for a more nuanced picture of skin color. Meta, meanwhile, has introduced FACET, a new way to measure fairness in computer vision models. Built on a diverse dataset of 32,000 images of people, FACET incorporates multiple fairness metrics and adds nuance to bias evaluation. Its use of geographically diverse annotators is a positive step toward addressing the inherent subjectivity of evaluating bias.
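To make the two-dimensional idea concrete, here is a minimal sketch that converts an sRGB pixel to CIELAB and reports perceptual lightness L* and hue angle h*. It assumes the two axes are CIELAB lightness and hue angle, which is how Sony’s research describes its measure; the function names, the standard sRGB/D65 conversion constants, and taking the median over pre-identified skin pixels are illustrative assumptions, not Sony’s released code.

```python
import math
import statistics

# Reference white for sRGB (D65), with Y normalized to 1.0.
XN, YN, ZN = 0.95047, 1.0, 1.08883


def _linearize(channel_8bit):
    """Undo the sRGB gamma curve for one 0-255 channel value."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t):
    """CIELAB companding function (cube root with a linear segment near zero)."""
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def lightness_and_hue(r, g, b):
    """Return (L*, h* in degrees) for one sRGB pixel. Hypothetical helper."""
    rl, gl, bl = _linearize(r), _linearize(g), _linearize(b)
    # Linear sRGB -> CIE XYZ under the D65 white point.
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl
    fx, fy, fz = _f(x / XN), _f(y / YN), _f(z / ZN)
    l_star = 116 * fy - 16          # 0 (black) to 100 (white)
    a_star = 500 * (fx - fy)        # green (-) to red (+)
    b_star = 200 * (fy - fz)        # blue (-) to yellow (+)
    hue = math.degrees(math.atan2(b_star, a_star)) % 360
    return l_star, hue


def summarize_skin(pixels):
    """Median L* and h* over pixels already identified as skin (assumed input)."""
    values = [lightness_and_hue(*p) for p in pixels]
    lightness = [v[0] for v in values]
    hues = [v[1] for v in values]
    return statistics.median(lightness), statistics.median(hues)
```

A one-dimensional scale keeps only something like L*; the second number, h*, separates skin that is more red from skin that is more yellow at the same lightness, which is the extra nuance the two-dimensional approach aims for.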

As AI continues to evolve, it becomes imperative to address these biases ethically. Dr. Clare Sutherland, co-author of the AI hyperrealism study, emphasizes the critical role of debiasing AI algorithms to ensure fair and equitable outcomes. The tools presented by Sony and Meta provide practical avenues for developers to assess and improve the diversity of their datasets, fostering more inclusive AI models.
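One way a developer might use lightness and hue measurements to assess dataset diversity is to bin each image’s values into a grid and flag sparsely populated cells. The sketch below assumes one (L*, h*) pair per image has already been computed; the bin widths, the `min_count` threshold, and the function names are hypothetical choices, not part of Sony’s or Meta’s tools.

```python
from collections import Counter


def coverage_grid(measurements, l_step=10.0, h_step=10.0):
    """Count images per (lightness bin, hue bin) cell.

    measurements: iterable of (L_star, hue_degrees) pairs, one per image.
    Bin widths are illustrative defaults, not values from any published tool.
    """
    grid = Counter()
    for lightness, hue in measurements:
        cell = (int(lightness // l_step), int(hue // h_step))
        grid[cell] += 1
    return grid


def underrepresented_cells(grid, l_bins, h_bins, min_count):
    """List cells in the given bin ranges holding fewer than min_count images.

    Counter lookups return 0 for missing cells, so empty cells are flagged too.
    """
    return [(i, j) for i in l_bins for j in h_bins if grid[(i, j)] < min_count]


# Toy measurements for illustration only.
grid = coverage_grid([(72.0, 55.0), (75.0, 58.0), (35.0, 50.0)])
print(underrepresented_cells(grid, range(3, 8), [5], min_count=2))
# -> [(3, 5), (4, 5), (5, 5), (6, 5)]
```

Checking explicit bin ranges, rather than only the cells that happen to appear, matters here: the groups most at risk are often the ones with no images at all.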

While these advancements mark progress in combating bias, it’s essential to maintain realistic expectations. As noted by Angelina Wang, a PhD researcher at Princeton, these tools will likely lead to incremental improvements rather than complete transformations in AI. However, the transparency and accessibility of these tools represent positive strides towards a more ethical and diverse future in artificial intelligence.

Taken together, AI hyperrealism, own-race bias, and evolving tools for measuring bias paint a complex picture of the intersection between technology and human perception. Navigating this landscape requires a commitment to ethical AI development, ongoing research, and the integration of diverse perspectives to ensure that the benefits of artificial intelligence are shared equitably across all communities.

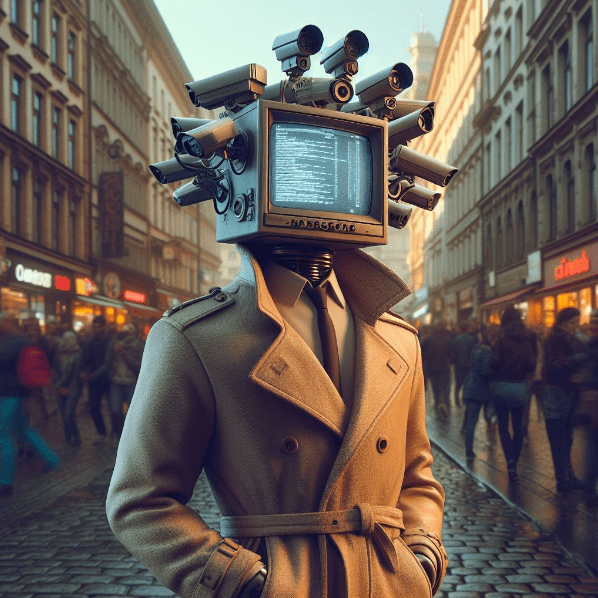

As we stand at the crossroads of technological innovation and societal impact, it’s crucial to anticipate the challenges that lie ahead. One pressing concern is the potential misuse of hyperrealistic AI faces in identity theft. The study’s findings suggest that the public may be susceptible to digital impostors, posing a significant risk to individuals and organizations alike.

Moreover, bias that extends beyond facial recognition into other computer vision tasks has broader implications. Models can be less accurate on images of people with darker skin tones, and traditional measures such as the Fitzpatrick scale are inadequate for capturing the diverse range of skin tones involved, which prompted Sony and Meta to develop more nuanced tools.
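Accuracy disparities of this kind are usually quantified by comparing a model’s performance group by group. The sketch below computes per-group accuracy and the gap between the best- and worst-served groups. It assumes each evaluation record carries a group label (for example, a perceived skin-tone bucket) and a correct/incorrect flag; it is a generic illustration, not FACET’s own metric code or API, and the toy records are invented for demonstration.

```python
from collections import defaultdict


def per_group_accuracy(records):
    """records: iterable of (group_label, was_correct) pairs."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, was_correct in records:
        totals[group] += 1
        hits[group] += int(was_correct)
    return {group: hits[group] / totals[group] for group in totals}


def accuracy_gap(records):
    """Return (best minus worst group accuracy, per-group accuracies)."""
    accuracy = per_group_accuracy(records)
    return max(accuracy.values()) - min(accuracy.values()), accuracy


# Toy records for illustration only; these are not results from any study.
toy = (
    [("lighter", True)] * 3 + [("lighter", False)]
    + [("darker", True)] * 2 + [("darker", False)] * 2
)
gap, by_group = accuracy_gap(toy)
print(by_group, gap)  # {'lighter': 0.75, 'darker': 0.5} 0.25
```

A single overall accuracy for these toy records (5 of 8 correct) would hide the gap entirely, which is why fairness benchmarks report results per group.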

As the discussion around AI hyperrealism, own-race bias, and tools for measuring bias unfolds, there is a resounding call for ethical AI development. Dr. Clare Sutherland’s emphasis on debiasing AI algorithms serves as a rallying cry for developers, researchers, and industry leaders to prioritize fairness and equity in AI systems. The potential consequences of biased AI, from perpetuating social biases to compromising identity security, underscore the urgency of addressing these issues head-on.

In this exploration of AI hyperrealism, biases in facial recognition, and tools for measuring bias, we traverse a complex landscape where technology and humanity intersect. The journey from the hyperreal illusions of AI faces to the challenges of bias in computer vision unveils both the promise and perils of advancing technology. As we navigate this landscape, it is crucial to keep ethical considerations at the forefront, ensuring that the benefits of AI are accessible to all and that biases are actively identified, addressed, and rectified.

In the grand tapestry of artificial intelligence, our collective responsibility is to weave a future where innovation is synonymous with inclusivity and where the potential of AI is harnessed for the betterment of society. The road ahead may be challenging, but with a commitment to ethical development and ongoing research, we can shape an AI landscape that reflects the rich diversity of the world it seeks to understand and improve. For my daily news and tips on AI and emerging technologies, sign up for my FREE newsletter at www.robotpigeon.beehiiv.com