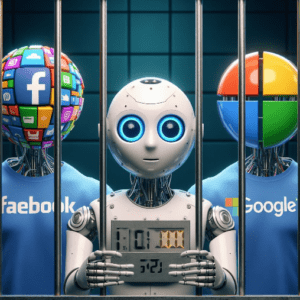

AI is Controlled and Owned by Big Tech

In the dynamic realm of artificial intelligence, recent events have thrust the influence of tech giants like Microsoft and Amazon into the spotlight. OpenAI, once viewed as a beacon of innovation and collaboration, witnessed a board breakdown that laid bare the intricate power dynamics shaping the future of AI. Simultaneously, Amazon’s multi-billion-dollar investment in Anthropic signals a deeper integration of cloud computing resources into the AI domain, raising questions about the evolving landscape and the imperative for transparent AI policies.

The recent turbulence at OpenAI, marked by the ousting and subsequent reinstatement of CEO Sam Altman, lays bare the extent of Big Tech’s sway over the AI narrative. Microsoft’s discreet but firm dominance in the “capped profit” entity reveals a stark reality: those with financial clout dictate the rules. This power play underscores the broader issue of concentrated power within the tech industry, posing threats not only to markets but also to democracy, culture, and individual agency.

OpenAI’s reliance on Microsoft’s computing infrastructure highlights the inescapable dependence of startups, new entrants, and AI research labs on industry behemoths. It echoes a larger trend in which many entities simply license and rebrand AI models from tech giants, perpetuating a cycle of dominance. The concentration of power not only stifles innovation but also raises security concerns, as Securities and Exchange Commission chair Gary Gensler has warned.

The tentacles of Big Tech extend beyond the boardroom, shaping the very trajectory of AI development. In an intensifying race to the bottom, dominant players release AI systems prematurely to retain their positions, at the expense of the industry’s ethical and developmental standards.

Shifting our gaze to Amazon, the recent announcement of a massive investment – up to $4 billion – in Anthropic reflects a growing trend in the tech industry. Under the deal, AWS becomes Anthropic’s “primary” cloud provider, with Anthropic utilising AWS for the majority of its AI model development. This partnership underscores the fusion of cloud computing resources with AI endeavours, positioning Amazon as a significant player in shaping the AI landscape.

Anthropic’s long-term commitment to offering its AI models to AWS customers reveals a symbiotic relationship, where AI innovation is fuelled by the vast resources of the cloud giant. The intertwining of interests highlights a challenge for those seeking industry-independent AI development – the limited alternatives outside the orbit of major players like Microsoft, Google, and Amazon.

As these events unfold, it becomes increasingly apparent that the AI market lacks a clear business model outside of bolstering cloud profits for Big Tech. The challenges faced by OpenAI and the strategic moves by Amazon prompt a critical examination of the need for transparent and robust accountability regimes.

Government intervention is suggested as a solution, but historical examples show that regulatory efforts can inadvertently consolidate corporate power. Recent moves by Microsoft in the UK, seemingly tied to regulatory scrutiny, exemplify how tech giants wield economic and political influence to safeguard their interests. In the quest for ethical AI, there is a pressing need for aggressive transparency mandates. Opacity around crucial issues, such as the data AI companies access for training models, must be dispelled to ensure responsible AI development. Liability regimes should compel companies to meet baseline standards of privacy, security, and bias before releasing AI products to the public.

To address the concentration of power, bold regulation is called for – one that mandates business separation between different layers of the AI stack. This approach would prevent Big Tech from leveraging infrastructure dominance to consolidate its position in the markets for AI models and applications. The events surrounding OpenAI and the Amazon-Anthropic collaboration serve as a wake-up call. As governments grapple with emerging regulations, it is crucial to prioritise public interests over the narrow concerns of industry stakeholders. Meaningful and robust accountability regimes can redefine the trajectory of AI, ensuring that promises align with the greater good.

In a landscape where Big Tech manoeuvres to protect its market dominance, the need for an ethical AI blueprint becomes more urgent than ever. We must collectively shape an AI future that values transparency, accountability, and the interests of the public over corporate bottom lines. As the shadows of Big Tech influence loom large, the time to act is now.

As we navigate the complex terrain of AI’s intersection with corporate interests, it becomes evident that the path forward requires collaborative governance. The AI community, policymakers, and the public must come together to shape policies that balance innovation with ethical considerations.

Aggressive transparency mandates should extend beyond data access to encompass the entire AI development lifecycle. Understanding the intricacies of AI models, their training data, and deployment strategies is vital for fostering trust in this rapidly evolving field.

Furthermore, liability regimes need to be robust, placing the onus on companies to prove their commitment to privacy, security, and bias standards. The era of releasing AI products without thorough scrutiny must come to an end, and companies should be held accountable for any adverse impacts on individuals or society.

The OpenAI-Microsoft saga serves as a cautionary tale, highlighting the potential pitfalls of regulatory frameworks that inadvertently empower large corporations. As we advocate for regulation, it’s crucial to learn from these events and craft frameworks that genuinely protect the public interest.

Regulators must be vigilant against attempts by tech giants to influence policy in their favour. Microsoft’s recent manoeuvres in the UK, strategically aligning with government ambitions, underscore the need for regulatory bodies to maintain independence and prioritise the concerns of all stakeholders, not just powerful corporations.

In the quest for a public-interest AI landscape, it’s imperative to reevaluate the existing business models that tie AI development to cloud profits. The industry must explore alternative avenues that prioritise ethical considerations over financial gains.

Open-source AI, often touted as a potential escape from industry concentration, faces its own set of challenges. The term itself is ill-defined and encompasses a spectrum of projects, some of which still operate under the influence of tech giants. The community must foster genuine independence, free from structural dependencies on major corporations.

While advocating for a regulatory framework, it’s crucial to recognise the delicate balance required. Governments must intervene to curb concentrated power and prevent anti-competitive practices, but they should also avoid stifling innovation. Striking this balance necessitates ongoing collaboration between industry experts, policymakers, and advocacy groups.

The recent pledges by governments to regulate AI systems before public release are steps in the right direction. However, the effectiveness of these measures will depend on the agility of regulatory bodies to adapt to the ever-evolving landscape of AI technology.

As we reflect on the intricate dance between Big Tech, AI startups, and regulatory bodies, the need for collective action becomes apparent. Shaping an inclusive AI future requires collaboration, transparency, and a commitment to public interests. The OpenAI-Microsoft saga and Amazon’s investment in Anthropic serve as pivotal moments that demand our attention. It’s time to move beyond profit-centric approaches and focus on creating AI systems that prioritise the well-being of individuals and society.

In this era of unprecedented technological advancement, the choices we make today will shape the AI landscape for generations to come. Let us seize the opportunity to redefine the narrative, ensuring that AI serves as a force for good, guided by principles of transparency, accountability, and inclusivity. The journey is challenging, but the destination – an ethical and equitable AI future – is worth the collective effort.