LangChain has emerged as a powerful framework for building advanced AI applications that can handle complex workflows with multiple components. Its ecosystem provides developers with the tools needed to create sophisticated, multi-step AI processes that combine language models with other services and data sources.

Key Highlights

Here are the main takeaways from the research:

- LangChain consists of three main products: LangChain (core framework), LangGraph (for state management), and LangSmith (for testing and monitoring).

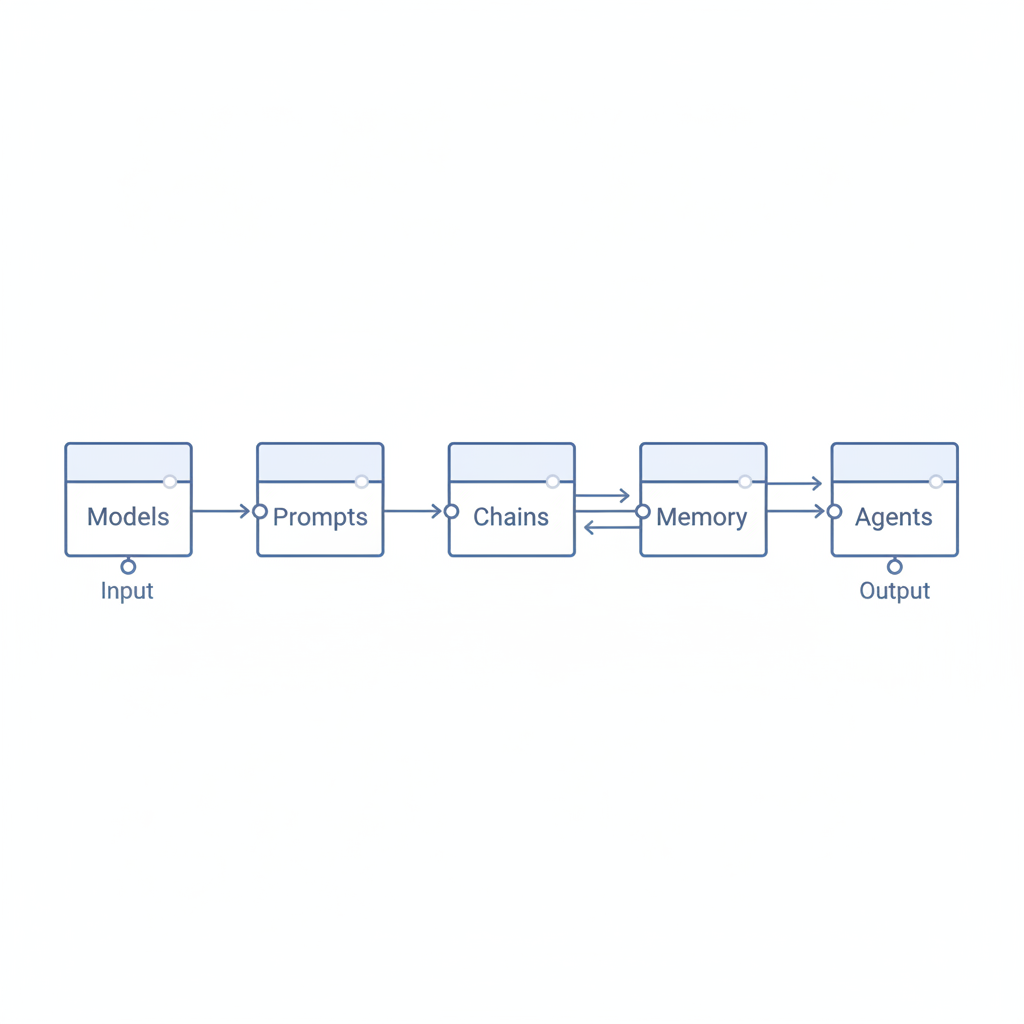

- The framework is built around five core abstractions: Models, Prompts, Chains, Memory, and Agents.

- LangChain Expression Language (LCEL) provides a declarative syntax for creating complex AI workflows without excessive boilerplate code.

- Advanced applications can implement multi-agent systems using architectural patterns such as parallel, sequential, or hierarchical arrangements.

- The ecosystem includes over 600 integrations and specialized tools for building production-ready AI applications.

Understanding the LangChain Ecosystem

LangChain provides a comprehensive framework designed specifically for developing applications powered by large language models (LLMs). The ecosystem consists of three main components: LangChain (the core framework providing building blocks and abstractions), LangGraph (for creating stateful, multi-step workflows), and LangSmith (for debugging, testing, and monitoring applications). Together, these tools enable developers to create complex AI workflows that were previously difficult to implement and maintain.

Core Abstractions and Architecture

At the heart of LangChain are five fundamental abstractions that form its architecture. Models represent the underlying language models like those from OpenAI or other providers. Prompts handle the templating and construction of inputs to these models. Chains combine multiple components into sequences of operations. Memory components store and retrieve conversation history or other contextual information. Agents can make decisions about which actions to take based on user input, effectively giving the AI a degree of autonomy in problem-solving.
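To make the division of labor concrete, here is a minimal, stdlib-only Python sketch of how a prompt, a model, a chain, and memory fit together. It does not use LangChain itself. ToyPrompt, EchoModel, BufferMemory, and run_chain are hypothetical stand-ins written for illustration (LangChain's real classes have much richer APIs), and EchoModel simply echoes its input instead of calling an LLM. Agents are left out for brevity.

```python
from dataclasses import dataclass, field
from string import Formatter


@dataclass
class ToyPrompt:
    """Prompt abstraction (hypothetical): a template string with named slots."""
    template: str

    def input_variables(self) -> set:
        # Collect the {placeholder} names declared in the template.
        return {name for _, name, _, _ in Formatter().parse(self.template) if name}

    def format(self, **values: str) -> str:
        missing = self.input_variables() - set(values)
        if missing:
            raise KeyError(f"missing prompt variables: {sorted(missing)}")
        return self.template.format(**values)


class EchoModel:
    """Model abstraction (hypothetical): echoes the prompt's last line instead of calling an LLM."""

    def invoke(self, prompt: str) -> str:
        return f"[model reply to: {prompt.splitlines()[-1]}]"


@dataclass
class BufferMemory:
    """Memory abstraction (hypothetical): stores every (user, ai) turn."""
    turns: list = field(default_factory=list)

    def as_text(self) -> str:
        return "\n".join(f"User: {u}\nAI: {a}" for u, a in self.turns)


def run_chain(prompt: ToyPrompt, model: EchoModel, memory: BufferMemory, question: str) -> str:
    # Chain: fill the prompt with history, call the model, then record the turn.
    text = prompt.format(history=memory.as_text(), question=question)
    answer = model.invoke(text)
    memory.turns.append((question, answer))
    return answer


prompt = ToyPrompt("{history}\nQuestion: {question}")
memory = BufferMemory()
print(run_chain(prompt, EchoModel(), memory, "What is LCEL?"))
# prints: [model reply to: Question: What is LCEL?]
```

Each abstraction has one job: the prompt only formats text, the model only maps text to text, and memory only stores turns, so any one of them can be swapped without touching the others.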

Building Applications with LCEL

The LangChain Expression Language (LCEL) gives developers a declarative, composable way to build AI workflows. LCEL is built on the Runnable interface: prompts, models, output parsers, and retrievers all expose the same methods (such as invoke, batch, and stream), so they can be chained together with the | operator. This reduces boilerplate code and keeps applications readable and maintainable as complexity grows.
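The core idea behind LCEL composition can be illustrated with a toy class. The sketch below is a simplified re-implementation under stated assumptions, not LangChain's code: the hypothetical ToyRunnable only mimics the shape of the real interface by offering invoke and batch and by overloading | so that a | b feeds the output of a into b. The "model" is a hypothetical lambda that upper-cases text.

```python
from typing import Any, Callable, List


class ToyRunnable:
    """Hypothetical mimic of the Runnable idea: a step with invoke() that composes via |."""

    def __init__(self, func: Callable[[Any], Any]):
        self.func = func

    def invoke(self, value: Any) -> Any:
        return self.func(value)

    def batch(self, values: List[Any]) -> List[Any]:
        # Simplest possible batch: invoke each input sequentially.
        return [self.invoke(v) for v in values]

    def __or__(self, other: Any) -> "ToyRunnable":
        # `a | b` returns a new step that pipes a's output into b.
        # Plain callables are wrapped so they can appear on the right of |.
        nxt = other if isinstance(other, ToyRunnable) else ToyRunnable(other)
        return ToyRunnable(lambda value: nxt.invoke(self.invoke(value)))


# Hypothetical steps: a prompt formatter, a fake "model", and an output parser.
prompt = ToyRunnable(lambda d: f"Summarize: {d['topic']}")
fake_model = ToyRunnable(lambda text: {"content": text.upper()})
parser = ToyRunnable(lambda message: message["content"])

chain = prompt | fake_model | parser
print(chain.invoke({"topic": "lcel"}))  # prints: SUMMARIZE: LCEL
print(chain.batch([{"topic": "a"}, {"topic": "b"}]))  # prints: ['SUMMARIZE: A', 'SUMMARIZE: B']
```

In LangChain itself, a comparable pipeline is typically written as prompt | model | StrOutputParser(), where each piece is a real Runnable that also supports streaming and async calls.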

Advanced Orchestration Techniques

LangGraph extends LCEL with explicit state management for complex workflows. Instead of a linear chain, a workflow is modeled as a graph: nodes read and update a shared state, and conditional edges decide which node runs next. This enables conditional logic, dynamic routing, loops, and state that persists across multiple interactions. Because the design resembles a state machine, developers can build applications that make different decisions based on context and previous interactions. This approach is particularly valuable for conversational agents that must maintain coherent dialogues, or for multi-step problems that require different strategies depending on intermediate results.
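A stdlib-only sketch of the state-graph idea follows. It is not the LangGraph API (which builds graphs with StateGraph, add_node, add_conditional_edges, and compile); run_graph, draft, route_after_draft, and the END string are hypothetical names, and the draft node fakes an LLM call that only produces an answer on its second attempt. Each node returns a partial update that is merged into the shared state, and a router function plays the role of a conditional edge.

```python
from typing import Callable, Dict, TypedDict


class State(TypedDict):
    question: str
    attempts: int
    answer: str


END = "END"  # hypothetical sentinel marking the end of the graph


def draft(state: State) -> dict:
    # Hypothetical node standing in for an LLM call: succeeds on the second attempt.
    attempts = state["attempts"] + 1
    answer = f"answer to {state['question']!r}" if attempts >= 2 else ""
    return {"attempts": attempts, "answer": answer}


def route_after_draft(state: State) -> str:
    # Conditional edge: loop back to "draft" until an answer exists.
    return END if state["answer"] else "draft"


def run_graph(
    state: State,
    nodes: Dict[str, Callable[[State], dict]],
    routers: Dict[str, Callable[[State], str]],
    start: str,
    max_steps: int = 10,
) -> State:
    current = start
    for _ in range(max_steps):
        update = nodes[current](state)
        state = {**state, **update}  # merge the node's partial update into shared state
        current = routers[current](state)
        if current == END:
            return state
    raise RuntimeError(f"no END after {max_steps} steps")


final = run_graph(
    {"question": "What is RAG?", "attempts": 0, "answer": ""},
    nodes={"draft": draft},
    routers={"draft": route_after_draft},
    start="draft",
)
print(final["attempts"], final["answer"])  # prints: 2 answer to 'What is RAG?'
```

The hypothetical max_steps guard matters once loops are allowed: a router that never returns END would otherwise run forever.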

Implementing Multi-Agent Systems

One of LangChain’s most powerful capabilities is its support for multi-agent systems, in which multiple specialized AI components collaborate to solve complex tasks. These systems can be arranged in several architectural patterns: parallel agents (multiple agents working simultaneously), sequential agents (agents working in a predefined order), and hierarchical systems (manager agents delegating to specialized workers). This approach mimics human team structures, with different agents specializing in distinct aspects of problem-solving.
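The three arrangements differ mainly in how work flows between agents. In this sketch, each "agent" is a hypothetical plain Python function standing in for an LLM-backed agent, and manager_plan is a fixed plan where a real manager agent would ask a model to decompose the task. Only the standard library is used; ThreadPoolExecutor models the parallel case.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

Agent = Callable[[str], str]


# Hypothetical specialist agents; real ones would wrap LLM calls.
def researcher(task: str) -> str:
    return f"notes on {task}"


def writer(task: str) -> str:
    return f"draft from ({task})"


def reviewer(task: str) -> str:
    return f"reviewed ({task})"


def sequential(agents: List[Agent], task: str) -> str:
    # Predefined order: each agent receives the previous agent's output.
    for agent in agents:
        task = agent(task)
    return task


def parallel(agents: List[Agent], task: str) -> List[str]:
    # All agents work on the same task concurrently; map() keeps input order.
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda agent: agent(task), agents))


def manager_plan(task: str) -> List[Tuple[str, str]]:
    # Hypothetical fixed plan; a real manager agent would ask a model to split the task.
    return [("researcher", f"{task} background"), ("writer", f"{task} summary")]


def hierarchical(workers: Dict[str, Agent], plan: Callable[[str], List[Tuple[str, str]]], task: str) -> List[str]:
    # The manager decides which worker handles each subtask.
    return [workers[name](subtask) for name, subtask in plan(task)]


print(sequential([researcher, writer, reviewer], "RAG"))
# prints: reviewed (draft from (notes on RAG))
```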

Memory and Tool Integration

LangChain offers solutions for both short-term and long-term memory, enabling applications to maintain context across interactions. Short-term memory keeps track of recent exchanges, while long-term memory can store and retrieve information from vector databases for persistent knowledge. LangChain also makes it simple to integrate external tools and services through its @tool decorator, which turns an ordinary function into a tool that a model can call. This allows developers to extend language model capabilities with specialized functions such as web search, calculation, or calls to third-party APIs.
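The mechanics of tool registration and short-term memory can be sketched without LangChain. Here, tool, Tool, REGISTRY, dispatch, and WindowMemory are hypothetical, simplified stand-ins: the toy decorator records a function's name and docstring, much as LangChain's @tool uses them for a tool's name and description, and the tool-call dictionary format ({"name": ..., "args": ...}) is an assumption made for this example. Long-term, vector-store memory is not shown.

```python
from collections import deque
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Tool:
    """Hypothetical tool record: a callable plus the metadata a model would see."""
    name: str
    description: str
    func: Callable[..., Any]

    def invoke(self, args: Dict[str, Any]) -> Any:
        return self.func(**args)


REGISTRY: Dict[str, Tool] = {}


def tool(func: Callable[..., Any]) -> Tool:
    """Toy decorator: wrap a function as a Tool named after it, described by its docstring."""
    wrapped = Tool(func.__name__, (func.__doc__ or "").strip(), func)
    REGISTRY[wrapped.name] = wrapped
    return wrapped


@tool
def multiply(a: int, b: int) -> int:
    """Multiply two integers."""
    return a * b


def dispatch(tool_call: Dict[str, Any]) -> Any:
    # Assumed tool-call shape: {"name": "<tool name>", "args": {...keyword arguments...}}.
    return REGISTRY[tool_call["name"]].invoke(tool_call["args"])


class WindowMemory:
    """Short-term memory: keeps only the most recent `k` messages."""

    def __init__(self, k: int = 4):
        self.messages = deque(maxlen=k)

    def add(self, message: str) -> None:
        self.messages.append(message)  # the oldest message drops off once full


print(dispatch({"name": "multiply", "args": {"a": 6, "b": 7}}))  # prints: 42
```

The docstring matters more than it looks: it is the only description the model sees when deciding whether to call the tool.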

Production-Ready AI Applications

Deploying LangChain applications to production is streamlined through LangServe, a library that exposes chains and other runnables as REST API endpoints built on FastAPI, with input and output schemas inferred from the chain. This abstracts away much of the boilerplate involved in serving AI applications. Configuration management allows for different settings across development, testing, and production environments, while LangSmith provides monitoring and evaluation tools to help ensure applications perform as expected in real-world scenarios.
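Configuration management across environments often comes down to selecting a settings object at startup. The sketch below assumes a hypothetical APP_ENV environment variable, hypothetical field names, and placeholder model names; none of these values come from LangChain or LangServe, and a real deployment would load them from its own configuration system.

```python
import os
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AppConfig:
    model_name: str       # placeholder names, not real model identifiers
    temperature: float
    timeout_seconds: int


# Hypothetical per-environment settings.
CONFIGS = {
    "development": AppConfig("small-dev-model", 0.7, 60),
    "testing": AppConfig("small-dev-model", 0.0, 30),
    "production": AppConfig("large-prod-model", 0.2, 20),
}


def load_config(env: Optional[str] = None) -> AppConfig:
    # An explicit argument wins; otherwise read the (assumed) APP_ENV variable.
    name = env or os.environ.get("APP_ENV", "development")
    if name not in CONFIGS:
        raise ValueError(f"unknown environment {name!r}; expected one of {sorted(CONFIGS)}")
    return CONFIGS[name]


config = load_config()
print(config.model_name, config.temperature)
```

Failing loudly on an unknown environment name is a deliberate choice: silently falling back to development settings in production is a common and costly mistake.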

Advanced RAG for Knowledge-Intensive Applications

Retrieval Augmented Generation (RAG) has become a cornerstone technique for grounding language models in external knowledge. LangChain provides RAG pipeline components that go beyond basic retrieve-then-generate implementations. Advanced techniques include conversational RAG (maintaining context across multiple queries), hybrid search (combining keyword and semantic search), and ensemble retrievers (running multiple retrieval strategies and merging their results). These approaches are particularly valuable for applications that require factual accuracy or domain-specific expertise, such as question answering over technical documentation or internal knowledge bases.
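Hybrid search and ensemble retrieval both need a way to merge ranked lists from different retrievers. A common choice is Reciprocal Rank Fusion (RRF), which LangChain's EnsembleRetriever documentation describes using to rerank combined results. The sketch below is a stdlib-only illustration with heavy simplifications: keyword_rank counts shared words as a crude stand-in for BM25, fuzzy_rank compares character-trigram vectors as a stand-in for real embeddings, and DOCS is a hypothetical three-document corpus. The constant k = 60 is a conventional RRF default, not a tuned value.

```python
import math
from collections import Counter
from typing import Dict, List

# Hypothetical three-document corpus.
DOCS = {
    "d1": "LCEL composes runnables with the pipe operator",
    "d2": "LangGraph adds stateful graphs with conditional edges",
    "d3": "LangSmith traces and evaluates LLM applications",
}


def keyword_rank(query: str) -> List[str]:
    # Keyword retriever: score = number of shared lowercase words (crude BM25 stand-in).
    terms = set(query.lower().split())
    scores = {d: len(terms & set(text.lower().split())) for d, text in DOCS.items()}
    return sorted((d for d in DOCS if scores[d] > 0), key=lambda d: -scores[d])


def trigrams(text: str) -> Counter:
    padded = f" {text.lower()} "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[gram] for gram, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def fuzzy_rank(query: str) -> List[str]:
    # "Semantic" retriever stand-in: character-trigram vectors instead of embeddings,
    # so "graph" still partially matches "graphs" even though the exact words differ.
    q = trigrams(query)
    scores = {d: cosine(q, trigrams(text)) for d, text in DOCS.items()}
    return sorted((d for d in DOCS if scores[d] > 0), key=lambda d: -scores[d])


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    # Each ranked list adds 1 / (k + rank) per document; agreement across lists wins.
    fused: Dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] = fused.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(fused, key=lambda d: -fused[d])


query = "conditional graph"
print(reciprocal_rank_fusion([keyword_rank(query), fuzzy_rank(query)]))
```

RRF uses only rank positions, not raw scores, which is why it can merge a word-count score and a cosine similarity without normalizing them onto a common scale.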

Future Directions in LangChain Development

As LangChain continues to evolve, the focus is increasingly on enterprise-grade features and integration with emerging AI technologies. The framework is being developed to support more sophisticated agent architectures that can tackle increasingly complex problems through improved reasoning capabilities. This includes better integration with vector databases, support for multi-modal models, and more sophisticated planning algorithms.

Building for Scale and Security

The LangChain ecosystem is increasingly addressing enterprise requirements for security, compliance, and scalability. New features aim to provide better guardrails for AI applications, preventing harmful outputs while maintaining performance. Community contributions, commercial support from LangChain, Inc., and integrations with model providers such as Anthropic (the creator of Claude) are helping to drive adoption in regulated industries where advanced safeguards are essential. These developments point toward a future where LangChain becomes the standard infrastructure for building trustworthy AI applications across various domains.

LangChain represents a significant advancement in how developers can build complex AI applications by providing the necessary abstractions and tools to manage sophisticated workflows. The framework bridges the gap between the raw capabilities of language models and the practical requirements of real-world applications that need to integrate with multiple data sources, services, and decision logic.

As AI systems continue to evolve, frameworks like LangChain will play an increasingly important role in making advanced capabilities accessible to developers while managing the complexity inherent in sophisticated AI applications.

Sources

LangChain Blog

LangChain Documentation

LangChain Dev Blog

DeepLearning.AI LangChain Course

LangChain GitHub Repository